A security investigation has uncovered a concerning trend: nearly two-thirds of the world’s most prominent AI companies have accidentally leaked sensitive credentials on GitHub.

These exposures include API keys, authentication tokens, and other critical secrets that could give attackers direct access to their systems.

Researchers studied 50 leading AI companies from the Forbes AI 50 list and found that 65% had exposed verified secrets.

These companies are worth over $400 billion combined, making this security gap particularly serious.

The leaked credentials weren’t just sitting in active repositories; many were hidden in deleted forks, old code branches, and personal developer accounts.

How Secrets End Up Exposed

Modern secret leaks operate like an iceberg. The obvious risks include credentials accidentally committed to public repositories.

But deeper problems exist beneath the surface. Deleted repository forks keep their complete commit history, making old secrets permanently accessible to anyone who finds them.
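Because a secret removed in a later commit still lives in the history, scanning only the current files is not enough. The sketch below is one minimal way to search patch output (such as that produced by `git log --all -p`) for tokens on added lines. The function name `find_added_secrets` is hypothetical, and the `hf_` pattern is only an approximation of a Hugging Face token format, chosen for illustration.

```python
import re
from typing import Iterator, Optional, Tuple

# Assumption: an approximate pattern for tokens with an "hf_" prefix.
# Real scanners ship many vetted patterns; this one is illustrative only.
TOKEN_RE = re.compile(r"\bhf_[A-Za-z0-9]{30,}\b")


def find_added_secrets(
    patch_text: str, pattern: "re.Pattern[str]" = TOKEN_RE
) -> Iterator[Tuple[Optional[str], Optional[str], str]]:
    """Yield (commit, file, token) for matches on added lines of patch output."""
    commit = None
    path = None
    for line in patch_text.splitlines():
        if line.startswith("commit "):
            # Header line of a commit in `git log` output
            commit = line.split()[1]
        elif line.startswith("+++ "):
            # "+++ b/path" names the file on the new side of the diff
            path = line[6:] if line.startswith("+++ b/") else line[4:]
        elif line.startswith("+"):
            # Added line: this content entered the history in this commit,
            # even if a later commit deleted it again
            for match in pattern.finditer(line[1:]):
                yield commit, path, match.group(0)
```

To use it, pipe the output of `git log --all -p` into the function as a string. Note that `--all` only covers refs present in the local clone; commits that survive solely on the hosting platform, such as those from deleted forks, would need a different discovery method.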

Automated workflow logs often contain deployment credentials, and developer personal accounts frequently harbor organizational secrets that were committed and forgotten.

This layered exposure creates multiple attack vectors that standard scanning tools miss entirely.

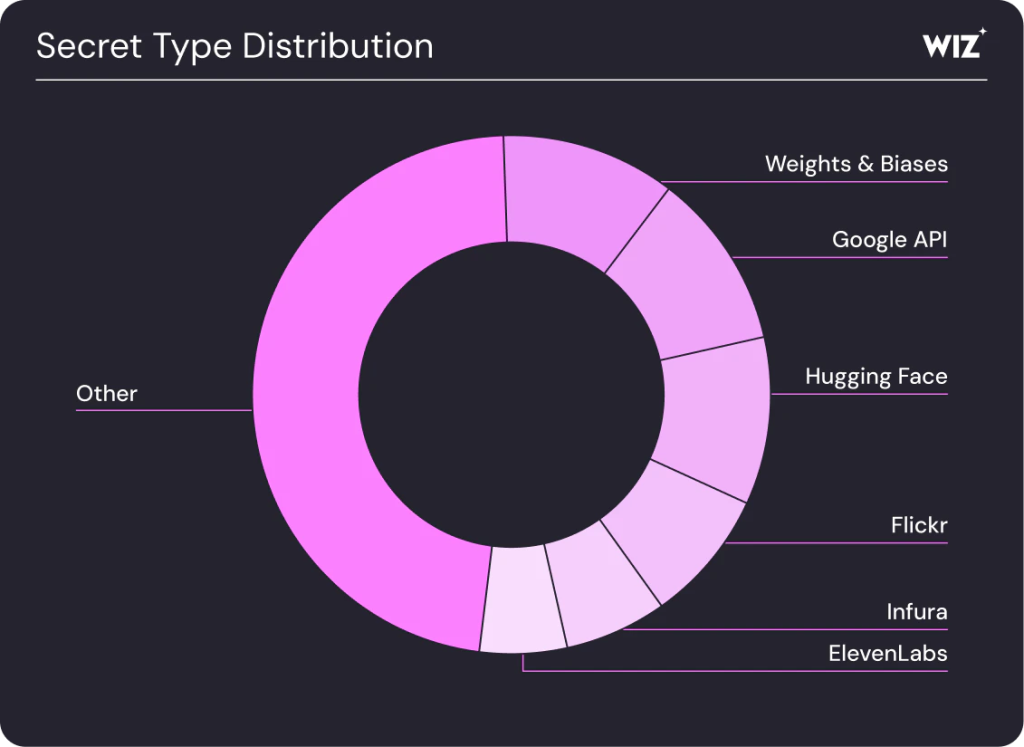

The exposed credentials grant access to some of AI companies’ most valuable assets. Leaked WeightsAndBiases tokens exposed training data for private machine learning models.

HuggingFace authentication tokens provided access to thousands of private model repositories.

ElevenLabs API keys and LangChain credentials could give attackers a gateway into proprietary systems and sensitive information.

Beyond immediate technical risks, these leaks revealed organizational structures, team member lists, and internal relationships, all of which are valuable information for social engineering attacks.

The research revealed an important fact: one AI company maintained 60 public repositories with 28 organization members and had zero exposed secrets.

This shows that solid secrets management can work, even for an organization with a sizable public footprint.

Companies like LangChain and ElevenLabs quickly acknowledged and fixed disclosed vulnerabilities.

However, nearly half of the reported leaks either never reached the affected company or received no response. Many startups lack official security disclosure channels.

AI organizations need three immediate actions: deploy mandatory secret scanning across all public code repositories, establish proper security disclosure channels from the beginning, and work with the security community to ensure detection tools support emerging secret formats.
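As a rough illustration of the first and third actions, here is a minimal sketch of a pattern-based scanner whose rule set can be extended when a new secret format appears. Everything here is an assumption for demonstration: the names `PATTERNS`, `register_pattern`, `scan_text`, and `Finding` are hypothetical, and the regular expressions are approximations of well-known token prefixes rather than vetted detection rules.

```python
import re
from dataclasses import dataclass
from typing import Dict, List

# Assumption: approximate patterns for a few well-known token prefixes.
# Production scanners use larger, verified rule sets.
PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "huggingface_token": re.compile(r"\bhf_[A-Za-z0-9]{30,}\b"),
}


@dataclass
class Finding:
    rule: str       # name of the pattern that matched
    line_no: int    # 1-based line number in the scanned text
    redacted: str   # first four characters, remainder masked


def register_pattern(name: str, regex: str) -> None:
    """Add or replace a rule, e.g. for a newly introduced secret format."""
    PATTERNS[name] = re.compile(regex)


def scan_text(text: str) -> List[Finding]:
    """Return one finding per pattern match, never echoing the full secret."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                value = match.group(0)
                masked = value[:4] + "*" * (len(value) - 4)
                findings.append(Finding(rule, line_no, masked))
    return findings
```

A check like this could run as a pre-commit hook or CI step that fails when `scan_text` returns any findings. Keeping the rules in a registry means a new vendor's token format can be covered with a single `register_pattern` call instead of a code change.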

The AI revolution depends on innovation and speed, but that future becomes worthless if the innovations themselves become compromised. For every AI company, securing secrets must keep pace with its advancing capabilities.
