The rapid proliferation of generative artificial intelligence platforms has created unprecedented opportunities for cybercriminals to launch sophisticated phishing campaigns, according to new research from Palo Alto Networks’ Unit 42 threat intelligence team.

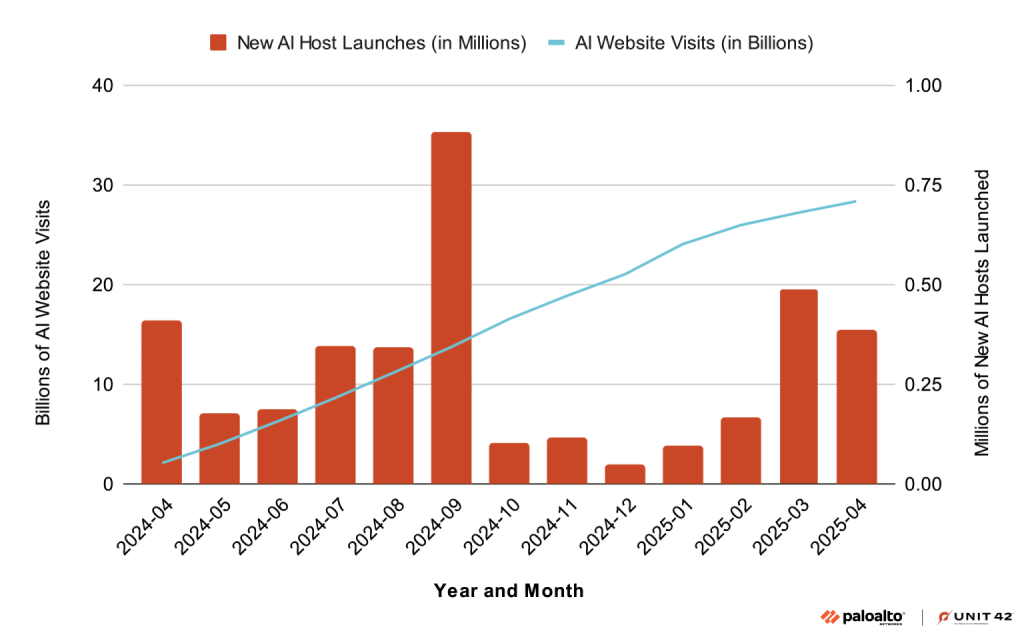

The study reveals that AI website visits more than doubled between April 2024 and April 2025, with threat actors increasingly leveraging these platforms to create convincing malicious content that traditional security measures struggle to detect.

The research identifies a concerning trend in which cybercriminals exploit several categories of AI-powered tools: website generators account for approximately 40% of the AI services misused for phishing, followed by writing assistants at 30% and chatbots at nearly 11%.

These platforms enable attackers to rapidly deploy realistic-looking fraudulent websites and generate persuasive phishing content with little technical expertise.

AI Website Builders: The New Frontier for Cybercrime

AI-powered website builders have become attractive tools for cybercriminals because they can create professional-looking websites within seconds.

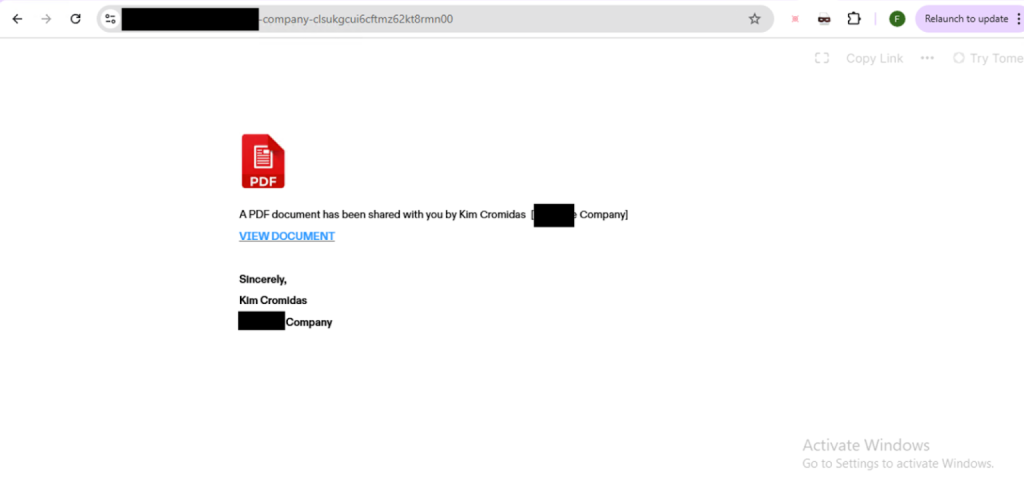

Unit 42 researchers discovered real-world phishing campaigns utilizing popular AI website builders that require no email or phone verification, allowing attackers to impersonate legitimate organizations with alarming ease.

In controlled testing, researchers demonstrated how a fake corporate website could be generated in approximately 60 seconds using only a brief text prompt.

The resulting pages included convincing AI-generated company descriptions, service offerings, and professional layouts that could easily deceive unsuspecting victims.

The platforms’ lack of adequate guardrails enables threat actors to create convincing brand impersonations without triggering verification processes.

Industry Adoption Patterns Reveal New Attack Surfaces

The research reveals that high-tech industries dominate AI adoption, accounting for over 70% of total generative AI tool usage, followed by education, telecommunications, and professional services sectors.

Most activity is concentrated in text-generation and media-generation tools, with approximately 16% dedicated to data processing and workflow automation.

This widespread adoption creates expanded attack surfaces as cybercriminals adapt their tactics to exploit these newly available platforms.

Writing assistant services, although currently misused mainly as hosting platforms for malicious content, represent a growing threat vector as attackers discover more sophisticated ways to leverage AI capabilities.

The findings indicate that current phishing attacks built on AI platforms appear relatively rudimentary but are expected to become significantly more convincing as AI-powered tools continue to evolve.

Security experts warn that traditional detection methods may struggle to identify these AI-generated threats, necessitating enhanced security measures and threat detection capabilities.

Organizations are advised to implement advanced URL filtering and DNS security solutions and to stay alert to emerging AI-powered attack vectors as generative AI adoption continues to reshape the cybersecurity landscape.