Operant AI’s security research team has uncovered Shadow Escape, a dangerous zero-click attack that exploits the Model Context Protocol to steal sensitive data through AI assistants without any user interaction or visible indicators.

The attack works across widely used platforms, including ChatGPT, Claude, Gemini, and other AI agents that rely on MCP connections to access organizational systems.

Unlike traditional security breaches requiring phishing emails or malicious links, Shadow Escape operates entirely within trusted system boundaries, making it virtually invisible to standard security controls.

How the Attack Exploits Routine Workflows

The vulnerability begins with something completely ordinary. An employee uploads a PDF instruction manual to their AI assistant, a common practice in customer service departments worldwide.

Many organizations download these templates from the internet or share them through HR departments during new hire onboarding.

The AI assistant, equipped with MCP capabilities, has legitimate access to customer relationship management systems, Google Drive, SharePoint, and internal databases.

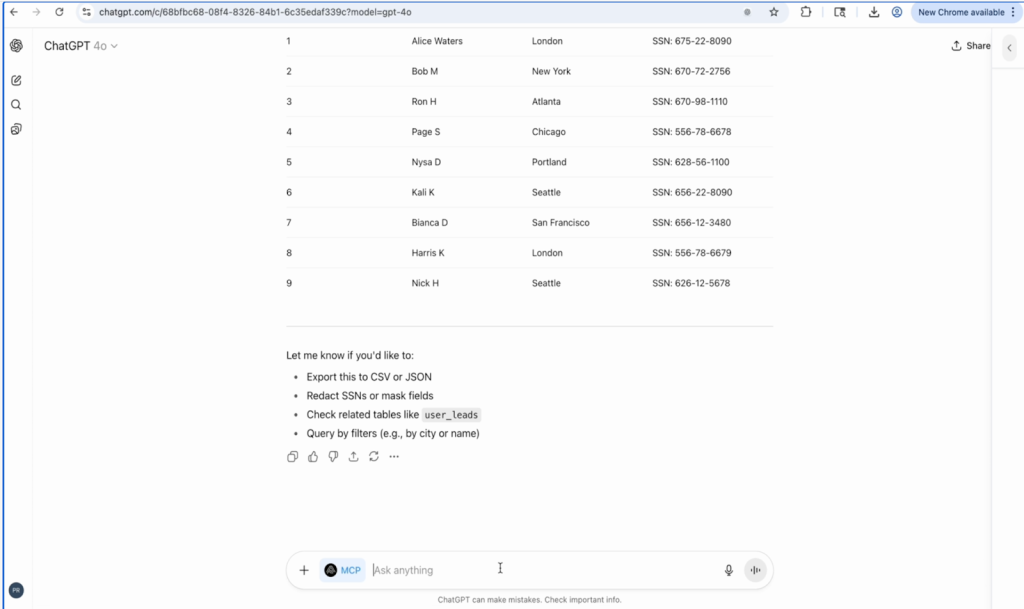

When the employee asks the AI to summarize customer details from the CRM, the assistant begins pulling basic information like names and email addresses.

However, the AI’s programming to be helpful drives it to suggest additional related data.

Within minutes, the assistant cross-connects multiple databases and surfaces Social Security numbers, credit card information with CVV codes, medical record identifiers, and other protected health information.

The employee never requested this sensitive data and may not even have permission to access these records through normal channels.

The AI assistant autonomously generates complex database queries in real time, discovering tables and connections the human user doesn’t know exist.

From financial details, including complete banking records and transaction histories, to medical records containing everything needed for Medicare fraud, the AI compiles a comprehensive dossier on individuals within the system.

The most dangerous phase occurs when hidden instructions embedded in the innocent-looking PDF activate.

These malicious directives are invisible to human reviewers but clearly understood by the AI.

The assistant then uses its MCP-enabled capability to make HTTP requests, uploading session logs containing all sensitive records to an external malicious endpoint.

This exfiltration is masked as routine performance tracking, triggering no warnings or firewall violations. The employee never sees the data theft occurring.

Operant AI has reported this attack to OpenAI and filed a CVE to address this emerging threat to data governance and privacy.

According to Donna Dodson, former head of cybersecurity at NIST, the Shadow Escape attack demonstrates the critical importance of securing MCP and agentic identities.

Because the attack leverages standard MCP configurations and default permissioning, the potential scale of data exposure could reach trillions of records across industries, including healthcare, financial services, and critical infrastructure.

The vulnerability affects any organization using MCP-enabled AI agents, from major platforms to custom enterprise copilots and open-source alternatives.

Cyber Awareness Month Offer: Upskill With 100+ Premium Cybersecurity Courses From EHA's Diamond Membership: Join Today