As artificial intelligence becomes the default interface for web search and customer support, a new cybersecurity threat is emerging: large language models (LLMs) are increasingly recommending phishing sites to users.

Recent research by Netcraft reveals that when asked for login URLs to well-known brands, LLMs provided incorrect or dangerous links more than a third of the time.

Out of 131 hostnames suggested for 50 major brands, 34% were not owned by the brands themselves, with 29% pointing to unregistered or inactive domains, and 5% leading to unrelated businesses.

This is not a result of sophisticated prompt engineering or malicious user input.

Netcraft’s team used simple, natural queries such as, “Can you tell me the website to log in to [brand]?”—mirroring how everyday users interact with AI chatbots.

The risk is systemic: AI-generated answers often strip away traditional trust signals like domain authority or verified search snippets, presenting even fake URLs with unwarranted confidence.

A striking real-world example involved Perplexity, a popular AI-powered search engine, which recommended a phishing site when a user asked for the Wells Fargo login page.

The top result was a convincing fake hosted on Google Sites (hxxps://sites[.]google[.]com/view/wells-fargologins/home), while the legitimate wellsfargo.com was buried below.

This shows how AI-generated answers can sidestep the ranking and reputation signals that traditional search relies on, exposing users directly to fraud.
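One practical takeaway is that a login URL suggested by a chatbot should be checked against domains the brand is known to own before anyone enters credentials. The sketch below makes some assumptions. It relies on a hand-maintained allowlist, and the `OFFICIAL_DOMAINS` mapping and `is_official_login_url` helper are hypothetical names, not part of any product. It also undoes the `hxxps`/`[.]` defanging used when the Wells Fargo example above is shared.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: brand name -> domains the brand is assumed to own.
# It should come from the brand's own published information, never from an LLM answer.
OFFICIAL_DOMAINS = {
    "wells fargo": {"wellsfargo.com"},
}


def refang(url: str) -> str:
    """Undo common defanging: a leading 'hxxp' becomes 'http' and '[.]' becomes '.'."""
    if url.startswith("hxxp"):
        url = "http" + url[4:]
    return url.replace("[.]", ".")


def is_official_login_url(url: str, brand: str) -> bool:
    """True only for an HTTPS URL whose host is an allowlisted domain or a subdomain of one."""
    parts = urlsplit(refang(url))
    host = (parts.hostname or "").rstrip(".")  # .hostname is already lowercased
    if parts.scheme != "https" or not host:
        return False
    for domain in OFFICIAL_DOMAINS.get(brand.lower(), set()):
        # Exact match or a true subdomain; "wellsfargo.com.evil.example" fails both tests.
        if host == domain or host.endswith("." + domain):
            return True
    return False


print(is_official_login_url("hxxps://sites[.]google[.]com/view/wells-fargologins/home", "Wells Fargo"))  # False
print(is_official_login_url("https://www.wellsfargo.com/", "Wells Fargo"))  # True
```

The check looks at who owns the host rather than whether the brand name appears in the URL. The Google Sites page contains "wells-fargo" in its path, but it is served from sites.google.com.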

From AI SEO to Code Poisoning

Criminals are rapidly adapting to this new landscape.

Instead of targeting Google’s search algorithm, attackers are now focusing on “AI SEO”—optimizing content to rank within chatbot responses.

Netcraft has documented over 17,000 AI-generated phishing pages on platforms like GitBook, targeting industries from cryptocurrency to travel.

These pages are linguistically tuned for AI, making them appear legitimate to both humans and machines.

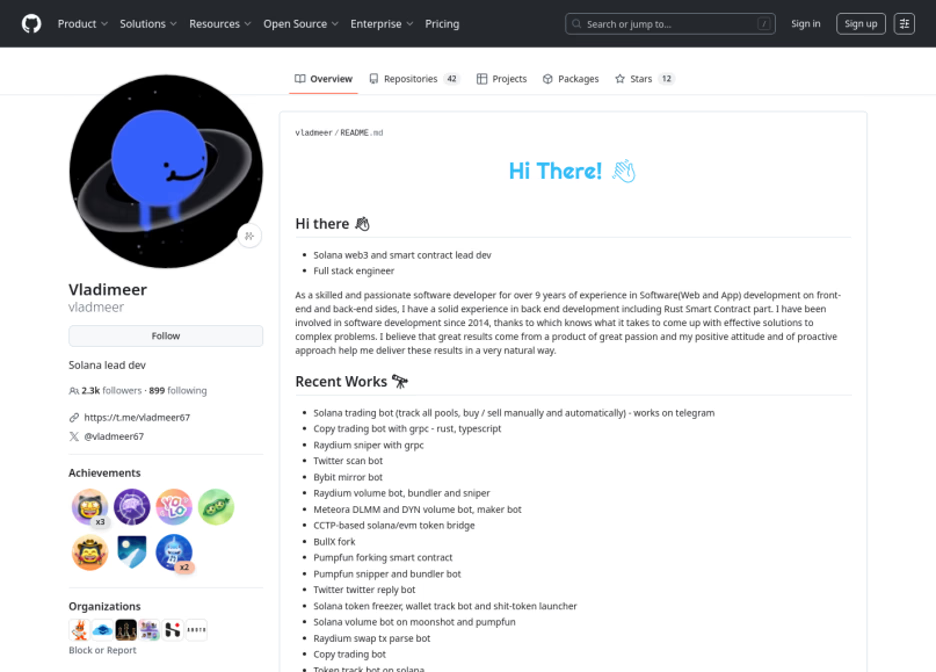

The attack surface extends beyond websites. In one campaign, threat actors created a fake Solana blockchain API (SolanaApis), hosted at api.solanaapis[.]com and api.primeapis[.]com.

They seeded malicious code into open-source repositories, blog tutorials, and Q&A forums, ensuring that AI coding assistants would ingest and recommend the poisoned code.

Several developers unwittingly copied this code into their projects, some built with AI tools like Cursor, creating a supply chain attack that perpetuates itself as these repositories feed back into LLM training data.
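On the code-poisoning side, one lightweight safeguard is to flag any hard-coded API host in copied or AI-suggested code that the project has not explicitly approved. This is a minimal sketch, not a tool described in the research. `TRUSTED_API_HOSTS` and `untrusted_endpoints` are hypothetical names, and the allowlist contents are an assumption for illustration.

```python
import re
from urllib.parse import urlsplit

# Hypothetical allowlist of API hosts the project has reviewed and approved.
# api.mainnet-beta.solana.com is Solana's public mainnet-beta RPC endpoint;
# whether to trust it (or anything else) is a per-project decision.
TRUSTED_API_HOSTS = {"api.mainnet-beta.solana.com"}

# Rough pattern for http(s) URLs embedded in source text; it stops at whitespace,
# quotes, angle brackets, and closing parentheses.
URL_PATTERN = re.compile(r"https?://[^\s'\"<>)]+")


def untrusted_endpoints(source: str) -> list[str]:
    """Return each distinct non-allowlisted hostname found in the source text, in order."""
    flagged: list[str] = []
    for match in URL_PATTERN.finditer(source):
        host = urlsplit(match.group()).hostname or ""
        if host and host not in TRUSTED_API_HOSTS and host not in flagged:
            flagged.append(host)
    return flagged


snippet = '''
client = Client("https://api.mainnet-beta.solana.com")
resp = post("https://api.unvetted-rpc.example/v1/swap", json=payload)
'''
print(untrusted_endpoints(snippet))  # ['api.unvetted-rpc.example']
```

A scanner like this only catches literal URLs. It would miss hosts assembled at runtime or loaded from configuration, so it complements code review rather than replacing it.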

Key technical terms:

- Phishing: Fraudulent attempts to obtain sensitive information by impersonating a trustworthy entity.

- AI SEO: The practice of optimizing content specifically to rank in AI-generated responses.

- Supply chain attack: Compromising a target by attacking less secure elements in its supply network.

- api.solanaapis[.]com: Example of a malicious API endpoint used for code poisoning.

Mitigation and Monitoring

Defensive domain registration (preemptively buying up potential fake domains) is not a scalable solution, given the effectively unlimited variations LLMs can generate.
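A rough illustration of the scaling problem: even limited to one-character typos of a single brand label, the number of candidates reaches the hundreds. That is before multiplying by top-level domains, and it ignores pages on shared hosts such as Google Sites, which cannot be registered away at all. The `single_edit_variants` function is a hypothetical sketch, not a tool from the research.

```python
import string

# Characters allowed in a DNS hostname label (case-insensitive).
ALPHABET = string.ascii_lowercase + string.digits + "-"


def single_edit_variants(label: str) -> set[str]:
    """Labels one deletion, adjacent swap, substitution, or insertion away from `label`."""
    splits = [(label[:i], label[i:]) for i in range(len(label) + 1)]
    deletes = {a + b[1:] for a, b in splits if b}
    swaps = {a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1}
    replaces = {a + c + b[1:] for a, b in splits if b for c in ALPHABET}
    inserts = {a + c + b for a, b in splits for c in ALPHABET}
    candidates = (deletes | swaps | replaces | inserts) - {label}
    # Hostname labels may not start or end with a hyphen.
    return {v for v in candidates if v and not v.startswith("-") and not v.endswith("-")}


variants = single_edit_variants("wellsfargo")
print(len(variants))              # count of single-edit lookalike labels
print("wellsfarg0" in variants)   # True: a digit-for-letter swap
```

Multiplying a few hundred labels by the many hundreds of existing top-level domains puts exhaustive registration out of reach, and it still would not cover lookalike pages hosted under someone else's domain.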

Real protection requires intelligent, real-time monitoring and rapid takedown of threats.

Netcraft, for example, says it combines machine learning with more than 70,000 expert-written rules to provide context-aware detection, and claims near-zero false positives and industry-leading takedown times.
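Netcraft does not publish its rules, but the general flavor of a rule-based check can be sketched. The hypothetical `looks_like_impersonation` function below combines two simple heuristics, assumed here for illustration. The first flags a non-official URL whose host or path contains the brand name, as in the Google Sites page above. The second flags a hostname label within one edit of the brand name. Real systems weigh many more signals. On its own, this check would produce false positives, for example on legitimate articles that mention the brand in their URL path.

```python
from urllib.parse import urlsplit


def normalize(text: str) -> str:
    """Lowercase and keep only letters and digits, so 'Wells-Fargo' -> 'wellsfargo'."""
    return "".join(ch for ch in text.lower() if ch.isalnum())


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row with dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # delete ca
                            curr[j - 1] + 1,             # insert cb
                            prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = curr
    return prev[-1]


def looks_like_impersonation(url: str, brand: str, official_domains: set[str],
                             max_typos: int = 1) -> bool:
    parts = urlsplit(url)
    host = parts.hostname or ""
    # Hosts on the brand's own domains are never flagged by these rules.
    if any(host == d or host.endswith("." + d) for d in official_domains):
        return False
    token = normalize(brand)
    # Rule 1: brand name embedded in the host or path of a third-party URL.
    if token in normalize(host + parts.path):
        return True
    # Rule 2: a hostname label that is a near-miss spelling of the brand.
    return any(edit_distance(normalize(label), token) <= max_typos
               for label in host.split("."))


OFFICIAL = {"wellsfargo.com"}
print(looks_like_impersonation("https://sites.google.com/view/wells-fargologins/home", "Wells Fargo", OFFICIAL))  # True
print(looks_like_impersonation("https://wellsfarg0.example/login", "Wells Fargo", OFFICIAL))  # True
print(looks_like_impersonation("https://www.wellsfargo.com/", "Wells Fargo", OFFICIAL))  # False
```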

As LLMs become further embedded in digital workflows, the risk of AI-driven phishing and code poisoning will only grow.

Brands and users alike must adapt, combining advanced threat intelligence with vigilant digital hygiene to stay ahead of the evolving threat landscape.
