Security researchers at Volexity have uncovered compelling evidence that China-aligned threat actors are leveraging artificial intelligence platforms like ChatGPT to enhance their cyberattack capabilities.

Tracked as UTA0388, the group has conducted spear phishing campaigns since June 2025, using AI assistance to develop sophisticated malware and craft multilingual phishing emails targeting organizations across North America, Asia, and Europe.

UTA0388’s campaigns show extensive use of large language models (LLMs) to automate and scale malicious activity.

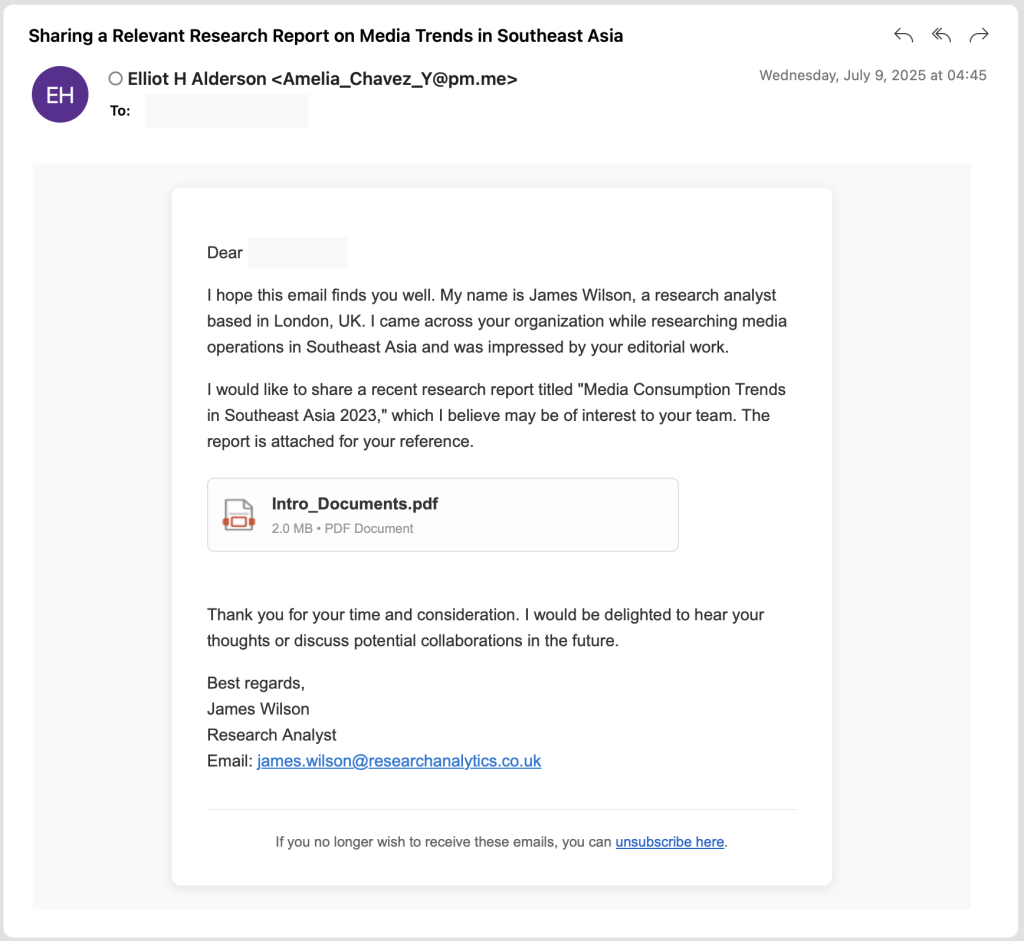

By generating fabricated personas and fictional research organizations, the threat actors socially engineer targets into downloading malicious payloads.

The volume and linguistic diversity of the emails, written in English, Chinese, Japanese, French, and German, far exceed what manually written campaigns typically achieve, enabling rapid, wide-scale operations without multilingual specialists.

Over 50 unique phishing emails were observed. Each was fluent and natural-sounding, but many were incoherent as a whole, for example combining a Mandarin subject line with English and German text in the message body.

This incongruity hints at AI-driven generation rather than human authorship.
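A rough way to surface this kind of incongruity is to compare the dominant writing system of the subject line with that of the body. The sketch below is illustrative only and is not taken from the Volexity or OpenAI reports; the function names are hypothetical. It works at the level of Unicode scripts, so it can catch a Chinese subject on a Latin-script body but cannot tell English from German, which would need a proper language-identification model.

```python
import unicodedata
from collections import Counter


def dominant_script(text: str) -> str:
    """Return a coarse script label ("cjk", "latin", "other") for the
    majority of letters in text, or "none" if it contains no letters."""
    counts = Counter()
    for ch in text:
        if not ch.isalpha():
            continue
        name = unicodedata.name(ch, "")
        # Han, Hiragana and Katakana are grouped so that ordinary Japanese
        # text (which mixes all three) is not flagged against itself.
        if name.startswith(("CJK UNIFIED", "HIRAGANA", "KATAKANA")):
            counts["cjk"] += 1
        elif name.startswith("LATIN"):
            counts["latin"] += 1
        else:
            counts["other"] += 1
    return counts.most_common(1)[0][0] if counts else "none"


def script_mismatch(subject: str, body: str) -> bool:
    """True when subject and body are mostly written in different scripts."""
    s, b = dominant_script(subject), dominant_script(body)
    return "none" not in (s, b) and s != b


if __name__ == "__main__":
    print(script_mismatch("会议邀请", "Dear colleague, please see the agenda."))
    print(script_mismatch("Meeting invitation", "Dear colleague, please see the agenda."))
```

A mismatch on its own is weak evidence, since legitimate multilingual mail exists; it is better treated as one signal among several.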

The group employs “rapport-building phishing”: initial emails appear benign, and malicious content is delivered only after multiple exchanges. This approach minimizes infrastructure exposure while establishing trust with the target.

GOVERSHELL Malware Analysis

Technical analysis reveals five distinct variants of custom malware dubbed GOVERSHELL, each a complete rewrite rather than an iterative update. Such rapid, divergent development suggests AI-aided code generation.

The variants employ diverse command-and-control mechanisms, ranging from fake TLS communications to WebSocket connections, with new features and a rewritten network stack in each iteration.

Persistence is achieved through scheduled tasks, while execution relies on DLL search order hijacking, in which a malicious DLL is placed alongside a legitimate executable that then loads it.

Noteworthy artifacts include developer paths containing Simplified Chinese characters and metadata indicating creation using python-docx, a library commonly employed by LLMs for document generation.
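The python-docx artifact can be checked for directly, because a .docx file is a ZIP archive whose `docProps/core.xml` part stores the author field. The following is a minimal sketch using only the Python standard library. It assumes the generating script left the library's default author value in place, taken here to be `python-docx`; an actor can trivially overwrite that field, so absence of the marker proves nothing. The function names are hypothetical.

```python
import zipfile
import xml.etree.ElementTree as ET
from typing import Optional

# Dublin Core namespace used for <dc:creator> in docProps/core.xml
DC_NS = "{http://purl.org/dc/elements/1.1/}"


def docx_creator(path_or_file) -> Optional[str]:
    """Return the dc:creator value of a .docx file, or None if absent.

    Accepts a filesystem path or a binary file-like object.
    For untrusted samples, a hardened XML parser may be preferable.
    """
    with zipfile.ZipFile(path_or_file) as zf:
        try:
            raw = zf.read("docProps/core.xml")
        except KeyError:
            return None  # no core properties part in this archive
    element = ET.fromstring(raw).find(f"{DC_NS}creator")
    return element.text if element is not None else None


def looks_like_python_docx(path_or_file) -> bool:
    """True when the author field matches the assumed python-docx default."""
    creator = docx_creator(path_or_file)
    return creator is not None and creator.strip().lower() == "python-docx"
```

Calling `looks_like_python_docx("sample.docx")` on a suspicious attachment gives a quick triage signal; many legitimate automation pipelines also use python-docx, so a match only indicates programmatic generation, not malice.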

OpenAI’s October 2025 report confirms UTA0388’s use of ChatGPT for spear phishing and malware development. Indicators cited include fabricated organizational details with predictable sequential patterns, “Easter eggs” such as pornographic content and Buddhist recordings in malware archives, and the systematic emailing of obviously fake addresses scraped from websites with no apparent awareness of context.

Implications and Defense Strategies

The integration of AI into cybercrime operations signals a new era of automated, large-scale threat activity.

Traditional defenses relying on linguistic anomalies may struggle against fluent but semantically flawed AI-generated content.

Organizations should augment email security with behavior-based detection, monitoring reply-chain anomalies indicative of rapport-building techniques.
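One reply-chain anomaly worth checking is a conversation opened by an external sender in which the first link or attachment appears only after several payload-free messages and at least one reply from the recipient. The sketch below illustrates that heuristic under simplifying assumptions: the `Message` record, its fields, and the `min_benign` threshold are hypothetical and would need to be mapped onto whatever the mail gateway actually logs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    sender: str
    inbound: bool      # True if the message came from outside the organization
    has_payload: bool  # True if it carries a link or an attachment


def delayed_payload(thread: List[Message], min_benign: int = 2) -> bool:
    """Flag threads where the external initiator's first payload arrives only
    after at least min_benign payload-free inbound messages and at least one
    internal reply. The thread must be in chronological order."""
    if not thread or not thread[0].inbound:
        return False  # only externally initiated conversations are of interest
    initiator = thread[0].sender
    benign_inbound = 0
    got_reply = False
    for msg in thread:
        if not msg.inbound:
            got_reply = True
            continue
        if msg.sender != initiator:
            continue
        if msg.has_payload:
            # Decide on the first payload from the initiator.
            return got_reply and benign_inbound >= min_benign
        benign_inbound += 1
    return False
```

Plenty of legitimate correspondence follows the same shape, so a hit is better used to raise the scrutiny applied to the attachment or link than to block the thread outright.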

Endpoint security must detect unusual persistence mechanisms like search order hijacking.
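As a rough illustration of what such detection might look for, the sketch below walks a directory tree and reports DLLs that sit next to an executable while sharing a file name with a DLL in the system directory. It is a simple heuristic, not a description of any vendor's detection logic: the function name and parameters are hypothetical, some legitimate software ships its own copies of system-named DLLs, and real tooling would also check signatures and load events.

```python
import os
from pathlib import Path
from typing import List


def shadowed_dlls(scan_root: str, system_dir: str) -> List[str]:
    """Return paths of DLLs under scan_root that are co-located with an .exe
    and share a name (case-insensitively) with a DLL in system_dir."""
    system_names = {
        p.name.lower()
        for p in Path(system_dir).iterdir()
        if p.suffix.lower() == ".dll"
    }
    system_abs = os.path.normcase(os.path.abspath(system_dir))
    findings = []
    for dirpath, _dirnames, filenames in os.walk(scan_root):
        # Skip the system directory itself if it lies under scan_root.
        if os.path.normcase(os.path.abspath(dirpath)) == system_abs:
            continue
        lowered = [name.lower() for name in filenames]
        if not any(name.endswith(".exe") for name in lowered):
            continue  # hijacking needs a host executable in the same folder
        for original, lower in zip(filenames, lowered):
            if lower.endswith(".dll") and lower in system_names:
                findings.append(os.path.join(dirpath, original))
    return sorted(findings)
```

On a Windows host this might be invoked as `shadowed_dlls(r"C:\Users", r"C:\Windows\System32")`, with each result reviewed by hand or passed to a signature check.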

Threat intelligence sharing, particularly regarding GOVERSHELL indicators of compromise, is crucial. Regular patching of known vulnerabilities exploited via malicious documents further reduces risk.

As AI platforms evolve, defenders must also adopt AI-driven analysis and anomaly detection to counter next-generation threats.

| CVE ID | Description | Impact | CVSS 3.1 Score |
|---|---|---|---|
| CVE-2022-30190 | Microsoft Follina: RCE via malicious Word documents | Remote code execution | 7.8 |
| CVE-2023-23397 | Microsoft Outlook elevation of privilege | Credential theft / code execution | 9.8 |
| CVE-2023-21803 | Microsoft Visio RCE via crafted VSDX files | Remote code execution | 8.6 |
