Microsoft has announced expanded Data Loss Prevention (DLP) capabilities to restrict Microsoft 365 Copilot from processing emails with sensitivity labels, addressing growing concerns about AI-driven data risks.

The feature, tied to Microsoft 365 Roadmap ID 489221, will enter public preview in June 2025 and reach general availability (GA) by August 2025.

This update ensures sensitive emails sent on or after January 1, 2025, are excluded from Copilot’s response summarization and grounding processes.

Overview of the New DLP Feature

The enhancement allows organizations to apply existing or new DLP policies to block Copilot from referencing emails marked with labels like Highly Confidential or Personal in chat interactions. Key aspects include:

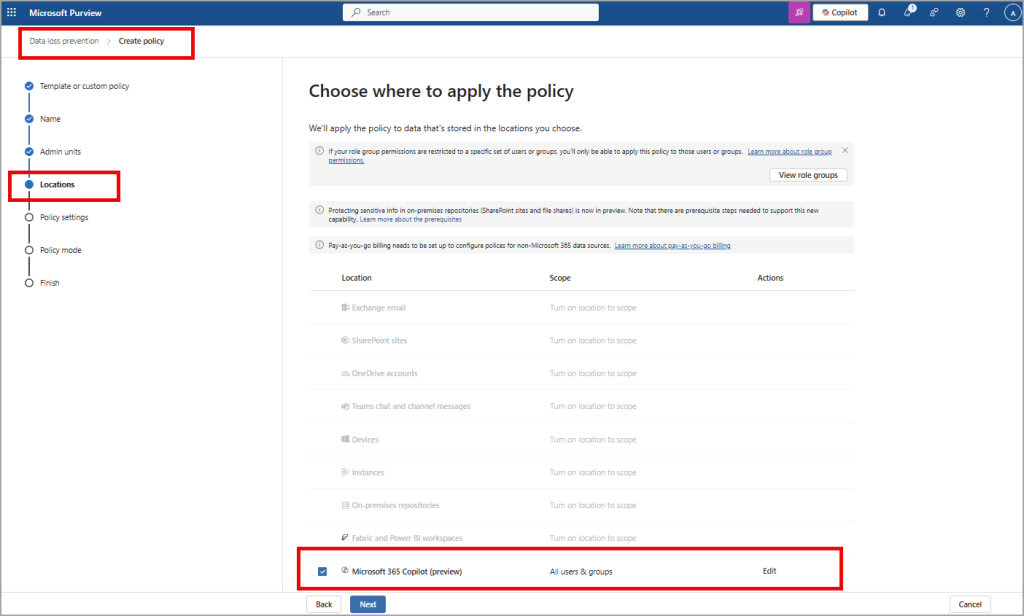

- Automated policy extension: Tenants with pre-existing DLP policies at the Microsoft 365 Copilot (preview) location will see these policies automatically apply to emails without admin intervention.

- Limited preview capabilities: Alerts, audit logs, and policy simulation tools are unavailable during the preview phase.

- License flexibility: A Microsoft 365 Copilot license is not required to configure or enforce these policies.

Administrators can create rules using the Content contains > Sensitivity labels condition in the Microsoft Purview portal, leveraging Microsoft’s Data Security Posture Management for AI (DSPM for AI) for centralized oversight.
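To make the rule logic concrete, here is a minimal Python sketch of how such a label-based block might be reasoned about. It is an illustrative model only, not Purview's API: the `Email` and `CopilotDlpRule` types, their field names, and the choice to compare the January 1, 2025 cutoff in UTC are all assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Cutoff from the announcement: emails sent on or after this date are covered.
# Comparing in UTC is an assumption made for this sketch.
CUTOFF = datetime(2025, 1, 1, tzinfo=timezone.utc)


@dataclass(frozen=True)
class Email:
    subject: str
    sent: datetime
    sensitivity_label: Optional[str]  # None means the email is unlabeled


@dataclass(frozen=True)
class CopilotDlpRule:
    """Hypothetical stand-in for a 'Content contains > Sensitivity labels' rule."""
    blocked_labels: frozenset

    def blocks(self, email: Email) -> bool:
        # Block only emails whose label matches the rule and that were
        # sent on or after the cutoff date.
        return (
            email.sensitivity_label in self.blocked_labels
            and email.sent >= CUTOFF
        )


rule = CopilotDlpRule(blocked_labels=frozenset({"Highly Confidential", "Personal"}))

emails = [
    Email("Q3 forecast", datetime(2025, 3, 4, tzinfo=timezone.utc), "Highly Confidential"),
    Email("Old contract", datetime(2024, 11, 2, tzinfo=timezone.utc), "Highly Confidential"),
    Email("Lunch menu", datetime(2025, 3, 4, tzinfo=timezone.utc), None),
]

# Emails the rule does not block remain available for grounding in this model.
groundable = [e.subject for e in emails if not rule.blocks(e)]
print(groundable)
```

In this model the labeled email from November 2024 stays available because it predates the cutoff, which mirrors the date scoping described earlier in the article.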

Technical Implementation and Integration

The DLP update integrates with Microsoft’s broader information protection framework:

- Sensitivity label enforcement: Copilot checks for VIEW and EXTRACT rights before processing labeled content, ensuring compliance with encryption settings. For example, a document with Highly Confidential encryption will be excluded from summaries unless users have explicit access.

- Cross-workload support: While initial DLP policies for Copilot focused on SharePoint and OneDrive, the new feature extends coverage to Exchange Online emails.

- Automated inheritance: New content generated by Copilot inherits the highest-priority sensitivity label from source materials, maintaining consistent protection.
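The rights check and label inheritance described in this list can be sketched roughly as follows. This is a simplified model under stated assumptions: the `LABEL_PRIORITY` ordering, the `Source` type, the function names, and the choice to apply the rights check only to encrypted items are invented for illustration and do not correspond to any Microsoft API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical label taxonomy; a higher number means higher priority.
LABEL_PRIORITY = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}

REQUIRED_RIGHTS = frozenset({"VIEW", "EXTRACT"})


@dataclass(frozen=True)
class Source:
    name: str
    label: Optional[str]
    user_rights: frozenset  # usage rights the requesting user holds on this item
    encrypted: bool = False


def can_process(src: Source) -> bool:
    """Encrypted content is usable only if the user holds both VIEW and EXTRACT."""
    if not src.encrypted:
        return True
    return REQUIRED_RIGHTS <= src.user_rights


def inherited_label(sources) -> Optional[str]:
    """Return the highest-priority label among the labeled sources, if any."""
    labels = [s.label for s in sources if s.label is not None]
    return max(labels, key=LABEL_PRIORITY.__getitem__, default=None)


sources = [
    Source("plan.docx", "Confidential", frozenset({"VIEW", "EXTRACT"}), encrypted=True),
    Source("salaries.xlsx", "Highly Confidential", frozenset({"VIEW"}), encrypted=True),
    Source("notes.txt", "General", frozenset()),
]

# salaries.xlsx is skipped because the user lacks the EXTRACT right.
usable = [s for s in sources if can_process(s)]
print([s.name for s in usable])
print(inherited_label(usable))
```

Because the excluded file never reaches the output, the generated content in this sketch inherits the highest label among the remaining sources rather than the label of the file that was filtered out.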

Testing methodologies, such as those documented by Office 365 IT Pros, highlight how labeled documents are silently excluded from Copilot search results, leaving no trace of restricted data.

Risk Factors and Mitigation Strategies

| Risk Factor | Mitigation Strategy |
|---|---|
| Data leakage via AI summarization | Apply sensitivity labels with encryption and configure DLP policies to block Copilot access. |
| Compliance violations | Use DSPM for AI to identify unlabeled sensitive data and generate label policies. |
| User disruption | Train teams on Copilot’s updated behavior using Microsoft Mechanics tutorials. |
| Policy misconfiguration | Test policies in audit mode pre-deployment and monitor via Purview activity logs. |

This update reinforces Microsoft’s commitment to secure AI adoption, enabling organizations to balance productivity gains with robust data governance.

IT teams should review sensitivity label taxonomies and leverage Purview’s Conditional Access integrations to preempt oversharing risks.

As Copilot’s capabilities expand into apps like Word and Excel, proactive DLP configuration will be critical to maintaining compliance in generative AI workflows.
